Human-in-the-Loop Learning

User Studies:

Human-in-the-Loop Data Collection Using Wearable Sensors and Mobile Devices for Machine Learning Algorithm Design

This research study aims to collect sensor data that will be used to develop machine learning algorithms. We want to collect data from a set of typical activities of daily living. The data collected in this study will be fully de-identified. You are being asked to take part because you are eligible to participate in this experiment. You will be considered eligible if you 1) are 18 years of age or older, 2) speak English, 3) have the desire to participate in data collection, and 4) have an Android smartphone with a data plan. You are not eligible to participate if you have a severe cognitive, hearing, visual, or mobility impairment that would impact your ability to complete study procedures. We will collect data from 20 individuals in this study.

- Flyer

- Interested? Fill out this screening survey.

- Consent Form

Related Research Papers:

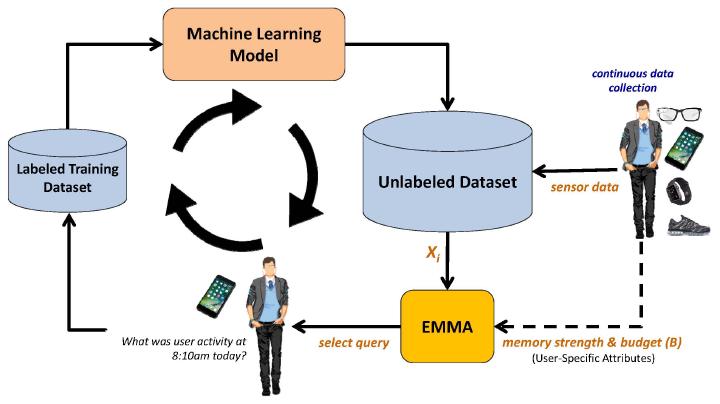

Mindful Active Learning

We propose a novel active learning framework for activity recognition using wearable sensors. Our work is unique in that it takes limitations of the oracle into account when selecting sensor data for annotation by the oracle. Our approach is inspired by human beings' limited capacity to respond to prompts on their mobile device. This capacity constraint is manifested not only in the number of queries that a person can respond to in a given time frame but also in the time lag between the query issuance and the oracle response. We introduce the notion of mindful active learning and propose a computational framework, called EMMA, to maximize the active learning performance taking informativeness of sensor data, query budget, and human memory into account. We formulate this optimization problem, propose an approach to model memory retention, discuss the complexity of the problem, and propose a greedy heuristic to solve the optimization problem. Additionally, we design an approach to perform mindful active learning in batch mode, where multiple sensor observations are selected simultaneously for querying the oracle. We demonstrate the effectiveness of our approach using three publicly available activity datasets and by simulating oracles with various memory strengths. We show that the activity recognition accuracy ranges from 21% to 97% depending on memory strength, query budget, and difficulty of the machine learning task. Our results also indicate that EMMA achieves an accuracy level that is, on average, 13.5% higher than when only the informativeness of the sensor data is considered for active learning. Moreover, we show that the performance of our approach is at most 20% less than the experimental upper-bound and up to 80% higher than the experimental lower-bound. To evaluate EMMA for batch active learning, we design two instantiations of EMMA that operate in batch mode. We show that these algorithms reduce training time at the cost of reduced accuracy. Another finding in our work is that integrating clustering into the process of selecting sensor observations for batch active learning improves the activity learning performance by 11.1% on average, mainly due to reducing the redundancy among the selected sensor observations. We observe that mindful active learning is most beneficial when the query budget is small and/or the oracle’s memory is weak. This observation emphasizes the advantages of utilizing mindful active learning strategies in mobile health settings that involve interaction with older adults and other populations with cognitive impairments.
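
The idea of weighing informativeness against the oracle's memory can be illustrated with a minimal sketch. This is not the EMMA formulation from the paper. It assumes that informativeness is the entropy of the model's predicted class distribution and that memory retention follows an exponential forgetting curve, and it simply picks the highest-scoring observations within the query budget. The names `retention`, `select_queries`, and `memory_strength` are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def retention(lag_minutes, memory_strength):
    """Assumed exponential forgetting curve (not taken from the paper):
    the chance that the oracle still recalls what they were doing."""
    return math.exp(-lag_minutes / memory_strength)

def select_queries(candidates, budget, memory_strength):
    """Greedily pick up to `budget` observations with the highest
    informativeness-times-retention score.

    candidates: list of (obs_id, class_probs, lag_minutes) tuples.
    """
    scored = [
        (entropy(probs) * retention(lag, memory_strength), obs_id)
        for obs_id, probs, lag in candidates
    ]
    scored.sort(reverse=True)  # highest score first
    return [obs_id for _, obs_id in scored[:budget]]

candidates = [
    ("a", [0.5, 0.5], 120.0),   # very uncertain, but observed long ago
    ("b", [0.6, 0.4], 5.0),     # fairly uncertain and recent
    ("c", [0.99, 0.01], 1.0),   # recent, but the model is already confident
]
selected = select_queries(candidates, budget=2, memory_strength=30.0)
print(selected)
```

With a weak memory (`memory_strength=30.0`), the old but highly uncertain observation `"a"` is skipped because the simulated oracle is unlikely to remember it. With a very large `memory_strength`, the score reduces to informativeness alone and `"a"` is selected first.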

Human-in-the-Loop Learning for Personalized Diet Monitoring from Unstructured Mobile Data

Lifestyle interventions with a focus on diet are crucial in self-management and prevention of many chronic conditions such as obesity, cardiovascular disease, diabetes, and cancer. Such interventions require a diet monitoring approach to estimate overall dietary composition and energy intake. Although wearable sensors have been used to estimate eating context (e.g., food type and eating time), accurate monitoring of dietary intake has remained a challenging problem. In particular, because monitoring dietary intake is a self-administered task that requires the end user to record or report their nutrition intake, current diet monitoring technologies are prone to measurement errors related to challenges of human memory, estimation, and bias. New approaches based on mobile devices have been proposed to facilitate the process of dietary intake recording. These technologies require individuals to use mobile devices such as smartphones to record nutrition intake by either entering text or taking images of the food. Such approaches, however, suffer from errors due to low adherence to technology adoption and time sensitivity to the dietary intake context. We introduce EZNutriPal, an interactive diet monitoring system that operates on unstructured mobile data such as speech and free text to facilitate dietary recording, real-time prompting, and personalized nutrition monitoring. EZNutriPal features a natural language processing unit that learns incrementally to add user-specific nutrition data and rules to the system. To prevent missing data that are required for dietary monitoring (e.g., calorie intake estimation), EZNutriPal devises an interactive operating mode that prompts the end user to complete missing data in real-time. Additionally, we propose a combinatorial optimization approach to identify the most appropriate pairs of food names and food quantities in complex input sentences. We evaluate the performance of EZNutriPal using real data collected from 23 human subjects who participated in two user studies conducted over 13 days each. The results demonstrate that EZNutriPal achieves an accuracy of 89.7% in calorie intake estimation. We also assess the impacts of the incremental training and interactive prompting technologies on the accuracy of nutrient intake estimation and show that incremental training and interactive prompting improve the performance of diet monitoring by 49.6% and 29.1%, respectively, compared to a system without such computing units.
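
To make the food-name and quantity pairing step concrete, here is a small sketch of one way such a combinatorial problem could be posed. It is an illustration only, not the optimization used in EZNutriPal: it assumes the cost of a pairing is the token distance between a food name and a quantity in the sentence, and it searches all assignments by brute force. The function name and the input format are hypothetical.

```python
from itertools import permutations

def pair_foods_with_quantities(foods, quantities):
    """Return the food-to-quantity pairing with the smallest total token distance.

    foods, quantities: dicts mapping a phrase to its token index in the sentence.
    Assumes there are at least as many quantities as foods; otherwise no
    complete pairing exists and an empty list is returned.
    Brute force over assignments, so only suitable for short sentences.
    """
    food_items = list(foods.items())
    qty_items = list(quantities.items())
    best_cost, best_pairs = float("inf"), []
    for perm in permutations(qty_items, len(food_items)):
        # Cost of this assignment: sum of distances between paired phrases.
        cost = sum(abs(fpos - qpos)
                   for (_, fpos), (_, qpos) in zip(food_items, perm))
        if cost < best_cost:
            best_cost = cost
            best_pairs = [(f, q) for (f, _), (q, _) in zip(food_items, perm)]
    return best_pairs

# Token indices for: "I had two eggs and a cup of rice"
#                     0 1   2   3    4   5 6   7  8
foods = {"eggs": 3, "rice": 8}
quantities = {"two": 2, "a cup": 5}
pairs = pair_foods_with_quantities(foods, quantities)
print(pairs)
```

For longer inputs, the same cost matrix could be handed to a polynomial-time assignment solver instead of enumerating permutations.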

Proximity-Based Active Learning for Eating Moment Recognition in Wearable Systems

Detecting when eating occurs is an essential step toward automatic dietary monitoring, medication adherence assessment, and diet-related health interventions. Wearable technologies play a central role in designing unobtrusive diet monitoring solutions by leveraging machine learning algorithms that work on time-series sensor data to detect eating moments. While much research has been done on developing activity recognition and eating moment detection algorithms, the performance of the detection algorithms drops substantially when the model is utilized by a new user. To facilitate the development of personalized models, we propose PALS, Proximity-based Active Learning on Streaming data, a novel proximity-based model for recognizing eating gestures to significantly decrease the need for labeled data for new users. Our extensive analysis in both controlled and uncontrolled settings indicates that the F-score of PALS ranges from 22% to 39% for a budget that varies from 10 to 60 queries. Furthermore, compared to the state-of-the-art approaches, off-line PALS achieves up to 40% higher recall and 12% higher F-score in detecting eating gestures.
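
As a rough illustration of what a proximity-based query rule on streaming data might look like, the sketch below labels each incoming sample with its nearest labeled neighbor and spends a query only when the sample is farther than a threshold from everything labeled so far. This is a hypothetical rule written for illustration, not the PALS algorithm. The Euclidean distance, the `radius` threshold, and all function and parameter names are assumptions.

```python
import math

def stream_with_proximity_queries(stream, oracle, seed, radius, budget):
    """Label a stream, querying the oracle only for samples that are far
    from every labeled sample, until the query budget is spent.

    stream: iterable of feature tuples.
    oracle: callable that returns the true label for a sample.
    seed:   non-empty list of (features, label) pairs to start from.
    """
    labeled = list(seed)
    predictions, queries_used = [], 0
    for x in stream:
        # Nearest already-labeled sample by Euclidean distance.
        nearest_features, nearest_label = min(
            labeled, key=lambda item: math.dist(item[0], x))
        if math.dist(nearest_features, x) > radius and queries_used < budget:
            label = oracle(x)           # unfamiliar region: ask the user
            labeled.append((x, label))
            queries_used += 1
        else:
            label = nearest_label       # familiar region: reuse nearby label
        predictions.append(label)
    return predictions, queries_used

seed = [((0.0, 0.0), "not_eating")]
stream = [(0.1, 0.0), (5.0, 5.0), (5.1, 4.9), (0.2, 0.1)]
oracle = lambda x: "eating" if x[0] > 2.5 else "not_eating"  # simulated user

predictions, used = stream_with_proximity_queries(
    stream, oracle, seed, radius=1.0, budget=1)
print(predictions, used)
```

In this toy stream, a single query on the first far-away sample is enough to label the sample that follows it without asking the user again.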