A large sensor foundation model pretrained on continuous glucose monitor data for diabetes management

Abstract

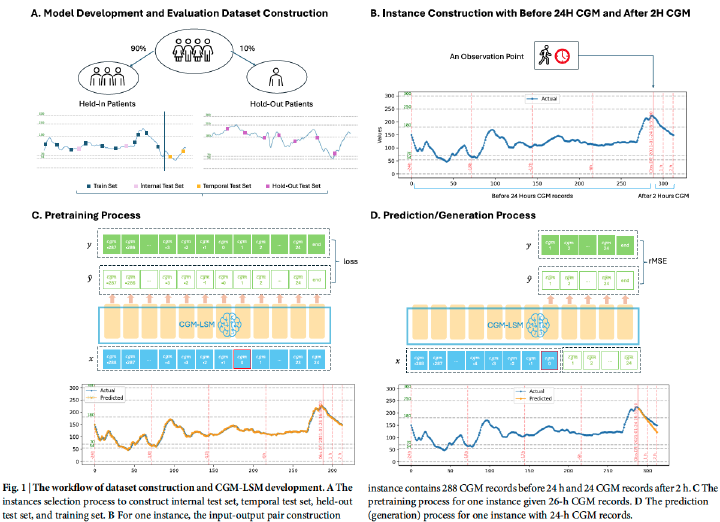

Continuous glucose monitoring (CGM) combined with AI offers new opportunities for proactive diabetes management through real-time glucose forecasting. However, most existing models are task-specific and generalize poorly across patient populations. Inspired by the autoregressive paradigm of large language models, we introduce CGM-LSM, a Transformer decoder-based Large Sensor Model (LSM) pretrained on 1.6 million CGM records from patients with different diabetes types, ages, and genders. We represent each patient as a sequence of glucose time steps, so that the model learns latent knowledge embedded in CGM data, and apply it to predicting glucose readings over a 2-h horizon. Compared with prior methods, CGM-LSM significantly improves prediction accuracy and robustness, with a 48.51% reduction in root mean square error (RMSE) for 1-h horizon forecasting and consistent zero-shot prediction performance across held-out patient groups. We analyze how model performance varies across patient subgroups and prediction scenarios, and outline key opportunities and challenges for advancing CGM foundation models.
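
To make the forecasting setup and the reported metric concrete, the following is a minimal Python sketch of an autoregressive rollout and of how a relative RMSE reduction can be computed. It is an illustration under stated assumptions, not the CGM-LSM implementation: the 5-minute sampling interval, the function names, and the `last_value` stand-in predictor are all hypothetical and are not specified in the abstract.

```python
import math
from typing import Callable, List, Sequence

# Assumption: one CGM reading every 5 minutes (not stated in the abstract).
STEP_MINUTES = 5


def horizon_steps(hours: float, step_minutes: int = STEP_MINUTES) -> int:
    """Number of future readings that cover a horizon of the given length."""
    return int(round(hours * 60 / step_minutes))


def autoregressive_forecast(
    history: Sequence[float],
    next_step: Callable[[Sequence[float]], float],
    n_steps: int,
) -> List[float]:
    """Roll a one-step predictor forward, feeding each prediction back in."""
    context = list(history)
    forecast = []
    for _ in range(n_steps):
        y = next_step(context)  # predict the next glucose value
        forecast.append(y)
        context.append(y)       # the prediction becomes part of the context
    return forecast


def rmse(pred: Sequence[float], true: Sequence[float]) -> float:
    """Root mean square error between two equal-length sequences."""
    if len(pred) != len(true) or not pred:
        raise ValueError("sequences must be non-empty and of equal length")
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(pred))


def relative_reduction(baseline_rmse: float, model_rmse: float) -> float:
    """Percentage by which model_rmse is lower than baseline_rmse."""
    return 100.0 * (baseline_rmse - model_rmse) / baseline_rmse


def last_value(context: Sequence[float]) -> float:
    """Hypothetical stand-in predictor: repeat the most recent reading."""
    return context[-1]
```

In this sketch a trained decoder would take the place of `last_value`; under the assumed 5-minute interval, `horizon_steps(2)` gives the number of rollout steps for a 2-h horizon, and `relative_reduction` shows how a figure such as a percentage RMSE reduction is derived from a baseline RMSE and a model RMSE.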