Abstract

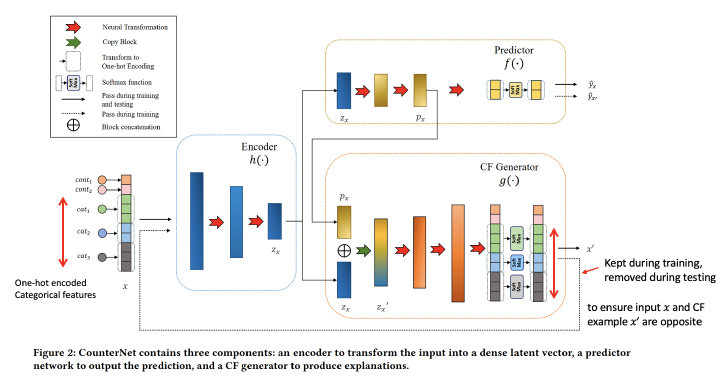

This work presents CounterNet, a novel end-to-end learning framework which integrates Machine Learning (ML) model training and the generation of corresponding counterfactual (CF) explanations into a single end-to-end pipeline. Counterfactual explanations offer a contrastive case, i.e., they attempt to find the smallest modification to the feature values of an instance that changes the prediction of the ML model on that instance to a predefined output. Prior techniques for generating CF explanations suffer from two major limitations: (i) all of them are post-hoc methods designed for use with proprietary ML models, so their procedure for generating CF explanations is uninformed by the training of the ML model, which leads to misalignment between model predictions and explanations; and (ii) most of them rely on solving a separate time-intensive optimization problem to find a CF explanation for each input data point, which negatively impacts their runtime. CounterNet departs from this prevalent post-hoc paradigm: it optimizes CF explanation generation only once, together with the predictive model. We adopt a block-wise coordinate descent procedure which helps in effectively training CounterNet's network. Our extensive experiments on multiple real-world datasets show that CounterNet generates high-quality predictions, consistently achieves 100% CF validity and low proximity scores (thereby achieving a well-balanced cost-invalidity trade-off) for any new input instance, and runs 3X faster than existing state-of-the-art baselines.
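To make the two evaluation quantities named above concrete, the following is a minimal sketch, not CounterNet's implementation. It assumes validity is the fraction of counterfactuals that the model assigns to the desired output, and proximity is the mean L1 distance between each input and its counterfactual; the exact definitions used in the experiments may differ (for example, in normalization). The function names `validity`, `proximity`, and `toy_predict`, and the toy data, are hypothetical.

```python
from typing import Callable, Sequence

Vector = Sequence[float]


def validity(
    predict: Callable[[Vector], int],
    counterfactuals: Sequence[Vector],
    desired: Sequence[int],
) -> float:
    """Fraction of counterfactuals predicted as the desired output (assumed definition)."""
    hits = sum(1 for cf, d in zip(counterfactuals, desired) if predict(cf) == d)
    return hits / len(counterfactuals)


def proximity(inputs: Sequence[Vector], counterfactuals: Sequence[Vector]) -> float:
    """Mean L1 distance between inputs and their counterfactuals (assumed definition)."""
    total = sum(
        sum(abs(a - b) for a, b in zip(x, cf))
        for x, cf in zip(inputs, counterfactuals)
    )
    return total / len(inputs)


def toy_predict(x: Vector) -> int:
    """Hypothetical stand-in classifier: thresholds the sum of two features."""
    return 1 if x[0] + x[1] > 1.0 else 0


# Toy inputs and hand-picked counterfactuals, for illustration only.
inputs = [(0.2, 0.3), (0.9, 0.4)]
counterfactuals = [(0.7, 0.5), (0.4, 0.4)]
# The desired output is the opposite of the original binary prediction.
desired = [1 - toy_predict(x) for x in inputs]

val = validity(toy_predict, counterfactuals, desired)
prox = proximity(inputs, counterfactuals)
```

Under these assumed definitions, higher validity and lower proximity are both better, which is the trade-off the abstract refers to as cost-invalidity.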