Towards Unifying Evaluation of Counterfactual Explanations: Leveraging Large Language Models for Human-Centric Assessments

Abstract

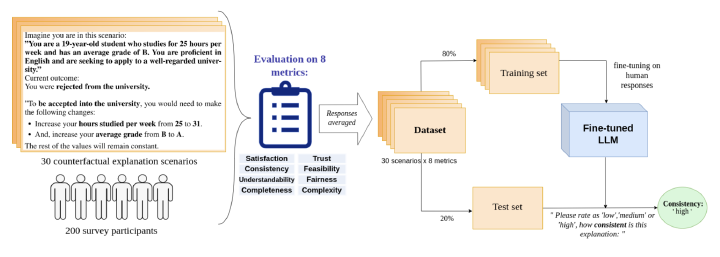

As machine learning models evolve, maintaining transparency demands more human-centric explainable AI techniques. Counterfactual explanations, with roots in human reasoning, identify the minimal input changes needed to obtain a given output and, hence, are crucial for supporting decision-making. Despite their importance, the evaluation of these explanations often lacks grounding in user studies and remains fragmented, with existing metrics not fully capturing human perspectives. To address this challenge, we developed a diverse set of 30 counterfactual scenarios and collected ratings across 8 evaluation metrics from 206 respondents. Subsequently, we fine-tuned different Large Language Models (LLMs) to predict average or individual human judgment across these metrics. Our methodology allowed LLMs to achieve an accuracy of up to 63% in zero-shot evaluations and 85% (over a 3-class prediction) with fine-tuning across all metrics. The fine-tuned models predicting human ratings offer better comparability and scalability in evaluating different counterfactual explanation frameworks.